Missed connections or The case for a structured global scientific repository

Preamble - off to Hawaii

It's a cold winter in Chicago, Lake Michigan is freezing over. You decide that it is time to escape, some place nice and warm -- say, Hawaii.

You go online to your favorite booking service, do a search and minutes later you have the flights and a hotel booked. That seemingly simple action precipitates a cascade of events (transmission of requests over the internet, processing by a booking system (search within their system, contacting airline, confirmation), transfer of confirmation back to your browser, ...) -- none of which you need to understand to use the system successfully.

The day of departure, excitedly you rush to the airport. You take a cab. You don't need to know who the driver is, what car he drives, or how the internal combustion engine works. Regardless of these details you can be confident that with very high probability you will make it to the airport on time.

O'Hare Airport. Every day thousands of planes take off and land. Air traffic controllers, aided by computers, direct the traffic flow, not needing to know the pilots' names or colors of their airplanes. The pilots respond to their directives, adjusting the controls of their airplanes, without giving much thought to the full complexity of the aerodynamic, hydraulic, and combustive processes that their actions effect.

You go past security, get on the plane, sink into your somewhat comfortable seat, and close your eyes, in anticipation of the pleasant journey...

After landing in Honolulu, you take a Wiki-Wiki bus to another terminal to catch the local plane to Maui. Unfortunately, the bus is too old and breaks down on the way -- and you miss the connection.

Brief summary

The power of encapsulation

Encapsulation makes even the most complex tasks manageable. It also leads to multiple levels of abstraction that allow us to manipulate high- and low-level concepts equally easily. Having logical acyclic connections between modules is important to avoid paradoxes.

Scientific theory as compression mechanism

Science provides a way to effectively and reliably compress a large variety of phenomena into simple predictive rules. Theories are modules that have limited domains of validity where they have been tested.

Good theory is a hierarchical theory

It is desirable for theories to have a nested structure -- simpler (easier to use) theories are limiting cases of other, more general and accurate, ones (example: geometric optics is a limiting case of wave optics). Such a hierarchy may be a reflection of the way humans think, or of how nature actually is -- or both.

Publication rate acceleration

Publications are the way science is currently recorded and communicated.

The number of science publications per year is exploding -- faster than exponentially!

Publications and knowledge

Even though several million publications come out every year, there is a growing disconnect with the actual amount of knowledge created; a large fraction of published papers are never cited or even read.

Is technology to blame?

Technology has made publishing easier than ever. However, finding the right content, and making sure that all potentially relevant papers are examined, is getting progressively harder.

How do scientists cope with the information explosion?

By going back to the basics: personal discussions and conferences. But those are not perfect either: even when slides, audio, and video records are preserved, they are arduous to mine.

A technological solution to filtering and structuring knowledge

A global hierarchical knowledge base, composed of compact logically connected nodes realizing part-of (from basic to more specialized) relationships may be an efficient way to distill knowledge from information, to learn, and to collaborate.

Powered by Humans

The system can grow at the "periphery" through work of experts at the research frontier. Educators and students can contribute to building the higher levels of the hierarchy. There are at least two major incentives that such a system would present to users: (1) global, but targeted, work visibility and (2) convenient environment for learning and private collaborations.

Open questions

The usefulness of hierarchical knowledge graph structure needs to be tested. As the amount of accumulated knowledge grows, will it remain easy to navigate and extend?

It is crucial for the reliability and the health of the repository to have participation of professional researchers and scientists. Ideally, they should not only use the system for their private projects and occasional contributions to the deepest level of the hierarchy, but also improve the content and structure at the higher levels. Will they feel sufficiently motivated to do so?

Both the fundamental and specific rules of governance that would lead to a healthy balance of innovation and quality need to be defined. In fact, the collaborative system outlined above can itself be used to develop them based on community input.

Full version

The power of encapsulation

As we go through our daily lives, we don't need to work too hard to accomplish very complex tasks. The reason is modularity: complex tasks, such as flying an airplane, are composed of subtasks -- maintenance, communication, piloting -- which in turn decompose into yet smaller subtasks, ultimately down to a level easily manageable by an individual.

The encapsulation leads to multiple levels of abstraction that encode "part-of/specific case of" relationships between modules. The existence of multiple levels of abstraction allows us to equally easily manipulate concepts that relate to our immediate needs (food, sleep, entertainment) and the meta-concepts of sustainability of life on Earth, or importance of education. When relationships between modules are not well defined, paradoxes of the Catch-22 type may occur.

Scientific theory as compression mechanism

The goal of science is to provide an encapsulation and compression mechanism for the valuable knowledge generated collectively by humankind. A good theory not only explains a particular phenomenon, but can predict how the outcome will change if something is altered at the input, thus providing a compressed understanding of a range of phenomena.

For instance, before the laws of kinematics were fully understood, the trajectory of every tossed rock may have been seen as completely unique and surprising, perhaps even deserving detailed recording and classification. After Newton, the magic was lost. "Give me initial position and velocity -- and I will predict the motion!" Even without knowing that the acceleration is caused by attraction between an object and Earth, this is still a good predictive theory that compresses the knowledge of the infinite number of possible trajectories into a simple computational algorithm.
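As an illustration of how compact that "computational algorithm" is, here is a minimal sketch in Python. It assumes a constant downward acceleration of 9.81 m/s^2 and no air resistance; the function names `position` and `flight_time` are hypothetical and chosen only for this example.

```python
G = 9.81  # m/s^2; assumed constant downward acceleration near Earth's surface


def position(r0, v0, t, g=G):
    """Return (x, y) at time t, given initial position r0 and initial velocity v0."""
    x0, y0 = r0
    vx, vy = v0
    # Horizontal motion is uniform; vertical motion is uniformly accelerated.
    return (x0 + vx * t, y0 + vy * t - 0.5 * g * t * t)


def flight_time(y0, vy, g=G):
    """Time until the rock reaches height y = 0 (assumes y0 >= 0)."""
    # Positive root of y0 + vy*t - g*t^2/2 = 0.
    return (vy + (vy * vy + 2.0 * g * y0) ** 0.5) / g


# Usage: a rock thrown from 1.5 m with velocity (3, 4) m/s.
t_land = flight_time(y0=1.5, vy=4.0)
print(position((0.0, 1.5), (3.0, 4.0), t_land))
```

Two short functions stand in for every possible toss: only the initial position and velocity change from one trajectory to the next.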

Good theory is a hierarchical theory

Kinematics is subsumed by a higher-level theory that recognizes that acceleration is caused by forces, which themselves can be of different types (electromagnetic, gravitational, etc.). At a yet higher level, all of the above fit within the framework of the General Theory of Relativity. The encapsulation at different levels of abstraction allows us to efficiently calculate the motion of a pendulum, without worrying about the curvature of space-time.

Such a hierarchical -- nested -- structure of existing theories may not only be a matter of our perception, but may indeed be the way the physical world is.

Publication rate acceleration

The currently accepted way to record scientific advances is by publishing research articles in scientific journals. This has been the case at least since 1650. The rate of publishing has been steadily increasing ever since. Between 1980 and 2012, 38 million papers were published (in natural and social sciences, health, humanities, and arts). It is to be expected that the publication rate should be increasing exponentially, as knowledge spurs more knowledge. Remarkably, however, in the 18th century the growth rate was only 1%/year, between the two World Wars it was 2-3%, and it has reached 8-9%/year currently[1]. The rate of publication growth is super-exponential!
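To make the growth rates above more tangible, the following sketch converts them into doubling times. A purely exponential process has a constant growth rate and hence a constant doubling time, so a shrinking doubling time is the signature of super-exponential growth. The function name `doubling_time` is hypothetical, and taking the upper end of each quoted range (3% and 9%) is an assumption made only for illustration.

```python
import math


def doubling_time(annual_growth):
    """Years needed for the yearly output to double at a fixed annual growth rate."""
    return math.log(2) / math.log(1 + annual_growth)


# Growth rates quoted in the text; upper ends of the ranges are assumed here.
rates = {
    "18th century": 0.01,
    "between the World Wars": 0.03,
    "currently": 0.09,
}

for era, rate in rates.items():
    print(f"{era}: {rate:.0%}/year, doubling in about {doubling_time(rate):.0f} years")
```

Under these assumptions the doubling time falls from roughly 70 years to roughly 23 and then to roughly 8.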

Publications and knowledge

Does the rapid growth of publication rate imply that the amount of accumulated knowledge is growing equally rapidly? Probably not. In 1965, D. de Solla Price stated "I am tempted to conclude that a very large fraction of the alleged 35,000 journals now current must be reckoned as merely a distant background noise, and as very far from central or strategic in any of the knitted strips from which the cloth of science is woven".[2] This is a harsh statement, which may or may not be true. Yet the question stands -- why is the publication rate so high? Part of the explanation may lie in the present-day academic "publish-or-perish" culture, which promotes incremental over-publication. However, there is likely a deeper problem.

The consequence of a very high publication rate is that the literature is bloated and hard to follow, which causes many scientists to give up on doing all the necessary background search before diving into their own projects and producing their own -- fingers crossed -- novel results. Those, however, are often destined to be missed by the target audience, just as the authors are likely to have missed prior relevant work by others. Indeed, a significant fraction of published articles are never cited by others, or even read.[3] How can this vicious cycle be broken?

Is technology to blame for publication rate explosion?

There is little doubt that the rapid increase of publication rate would be impossible without the technological developments of the last decades. Computers, word processors, and a myriad of online journals have made publishing easier than ever before. In the past, a manuscript had to be hand-written, calculations and graphs were often done by hand, and correspondence with journals was through regular mail. It stands to reason that researchers used to be more selective regarding what is worth publishing, and what is not.

The scientific enterprise of the present day has features of a gold rush -- equipped with new computing, data-mining, and publishing tools, researchers have ramped up their output. Preparing a manuscript, and finding a willing outlet for it, has become easier than ever before.

However, the problem that remains unsolved, and has in fact been exacerbated, is access to the target audience. Here technology has been lagging. Even though Web of Science or Google Scholar can quickly search through millions of published papers, they cannot eliminate the massive redundancy across the results. The distillation of unique content is an arduous and time-consuming process. Back to the analogy with the gold rush: there might be a lot of gold in the mined ore, but extracting it is not an easy task.

How do scientists cope with the information explosion?

In the face of the overwhelming production rate of scientific content -- what do scientists do? Paradoxically, they turn back more and more to the classic ways of communicating and learning new ideas and results -- personal interaction and conference presentations, instead of flipping through journals. At a top university or research institute, the flux of students and speakers largely obviates the need to read new papers. Similarly, going to a conference, and being able to hear presentations and ask speakers pointed questions, is an efficient way to learn. At well-run conferences, plenary overview talks fulfill the need of providing the roadmap of various topics and subtopics.

As a public good, the conference format, however, is not ideal either: The majority of the communications and discussions that transpire are ephemeral, since typically no care is taken to record them, even if the participants do not object and see the value in having those preserved. And even when there is a full record of presentations and accompanying discussions -- the Kavli Institute for Theoretical Physics being a prominent example -- mining that information is still a daunting task.

A technological solution to filtering and structuring knowledge

Perhaps in the not-too-distant future Artificial Intelligence will reach a level at which computers will be able to take the entire corpus of written, audio and video information, and distill it, eliminating all superfluous and erroneous information. But is there a way to solve the informational deluge problem today, without needing to wait for the rescue by AI? Can't already existing technology benefit the way we do science?

The main problems that need to be solved are excessive redundancy and inefficient communication. These can be addressed by having a single structured system -- a repository -- where all the publications (more precisely, their unique results) and ideas find their place, in relation to all the other content. Each individual contribution should be available to be discussed or challenged.

The key to the usefulness of such a system, both for readers and authors, is for it to have a hierarchical graph structure, with higher-level modules -- nodes -- providing the overview of the lower-level ones. Such a hierarchy would enable beginners and managers to stay at the high "Scientific American" level, while experts would be able to quickly navigate to an arbitrary level of depth (detail).

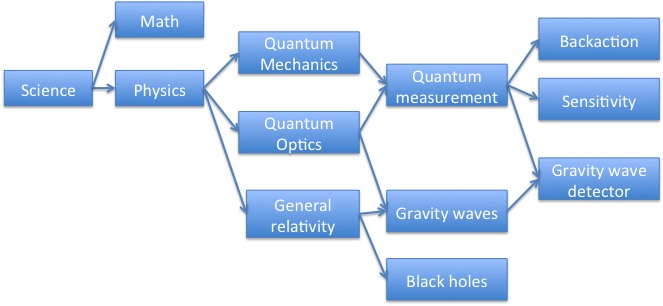

An appropriate highest level module could be Science, while at the lowest level, one could find, for example, the detailed discussion of the mechanics of LIGO gravitational wave interferometer. A directed pathway in this case could be something like this:

While this kind of knowledge graph structure differs substantially from the standard linear textbook or article format, its implementation, scalable to arbitrary size, is straightforward. The simple "design rule" for the system is that to understand any node, the user is expected to understand all its ancestors, but not siblings or descendants. This would make navigation and learning from such a system highly efficient.
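The design rule can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the node names and the `PARENTS` mapping form a hypothetical hierarchy invented for this example (not the pathway from the original figure), the function name `reading_order` is likewise hypothetical, and the graph is assumed to be acyclic.

```python
# Hypothetical part-of hierarchy: each node lists its direct parents.
PARENTS = {
    "Science": [],
    "Physics": ["Science"],
    "Gravitation": ["Physics"],
    "Optics": ["Physics"],
    "Gravitational waves": ["Gravitation"],
    "Interferometry": ["Optics"],
    "LIGO interferometer mechanics": ["Gravitational waves", "Interferometry"],
}


def reading_order(node, parents=PARENTS):
    """List every ancestor of `node` so that each one appears after its own parents.

    Siblings and descendants are never visited, mirroring the design rule.
    Assumes the graph has no cycles.
    """
    order, seen = [], set()

    def visit(current):
        for parent in parents[current]:
            if parent not in seen:
                seen.add(parent)
                visit(parent)         # first collect the parent's own ancestors
                order.append(parent)  # then the parent itself

    visit(node)
    return order


for step in reading_order("LIGO interferometer mechanics"):
    print(step)
```

Because only ancestors are traversed, the amount of material a reader needs depends on the depth of the path to a node rather than on the total size of the repository.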

Powered by Humans

How can a global hierarchical knowledge repository grow?

At the deepest level it can grow from the private projects developed in closed collaborations, whose members may then choose to "publish" their knowledge sub-graph by fitting it into the public hierarchy. The higher public levels can benefit from the work of students and educators, in the way that Wikipedia is currently built. With time, the content at the "research front" will become part of the "establishment", sprouting new knowledge, ad infinitum.

Why would scientists of today, working under publish-or-perish conditions, choose to spend their time contributing to such a system? One reason is that by placing their research, even already published, into the correct context, they position it to be seen by the relevant fellow researchers. This is the visibility and recognition benefit.

In addition, they may find that research and private collaborations in a system of this kind are more efficient. This is the work efficiency benefit. The two benefits are interconnected, since making selected parts of their research visible to the public would require only minimal effort, if it is organized in a modular hierarchical fashion to begin with.

Open questions

As appealing as it may sound, organizing all scientific knowledge into a global structured repository is a complex task. The most basic question is whether a directed graph structure is simultaneously flexible and rigid enough to efficiently map out knowledge modules (nodes) and connections between them in a way that is easy to build and natural to use.

There are also important sociological questions. Will the members of the scientific community be willing to put their time into building and maintaining such a system? What type of incentives, besides visibility and personal convenience, would make high-quality public contributions more likely? Can a reputation system serve as an incentive? Is there a need for "law-enforcement" and "publication" approval by high-reputation experts?

These questions can only be answered with sufficient usage, and by experimenting with different Constitutions. Interestingly, those themselves could be designed and refined by the community from within the system.

Epilogue

In 1965, D. de Solla Price wrote that in certain fields

the traditional procedure has been to systematize the added knowledge from time to time in book form, topic by topic, or to make use of a system of classification optimistically considered more or less eternal, as in taxonomy and chemistry. If such classification holds over reasonably long periods, one may have an objective means of reducing the world total of knowledge to fairly small parcels in which the items are found to be in one-to-one correspondence with some natural order.[2]

Will we be able to take advantage of the powerful new technologies that have emerged since then to realize this vision?